Introduction

This article presents FPT Software's approach to applying detection-as-code strategies in order to build an automated solution that streamlines SIEM management across multiple environments using CI/CD pipelines.

The article follows this outline:

- Why Detection as Code: Traditional Workflow.

- Putting Detection as Code into practice.

- Integrating CI/CD into the infrastructure: Automating rules testing and uploading.

Tools

To create detection rules:

- Rule format: Sigma

- Rule converter: pySigma, sigma-cli

- Sigma backend: splunk, ala-sifex

SIEMs: Splunk Enterprise, Microsoft Sentinel

Code Repository and CI/CD Pipeline: Azure DevOps

Why "Detection as Code": Traditional Workflow

Traditional Workflow

Security Information and Event Management (SIEM) tools play an important role in the Security Operations Centers (SOCs) of many organizations worldwide. At their most basic level, they collect log data and collate it into a single, centralized platform. These SIEM tools enable threat detection by highlighting suspicious and malicious activities, real-time event monitoring, and security data logging for compliance.

However, the standard operating procedure for managing SIEM systems involves tedious processes. Traditionally, analytics rules are manually created and configured in each environment, often by security analysts or SIEM engineers. This approach is poor practice for several reasons:

- Lack of scalability: As the number of environments increases, it becomes increasingly challenging to manage and maintain rules manually.

- Inefficiency: Manually creating and updating rules can be time-consuming and hinder the productivity of security teams.

- Inconsistency: Manually configured rules across different environments may not be consistent.

- Difficulty in tracking changes: Without a version control system, it's hard to keep track of what changes were made, by whom, and when.

- Increased risk of human error: Manual configuration increases the risk of mistakes, which could lead to gaps in threat detection.

Detection as Code

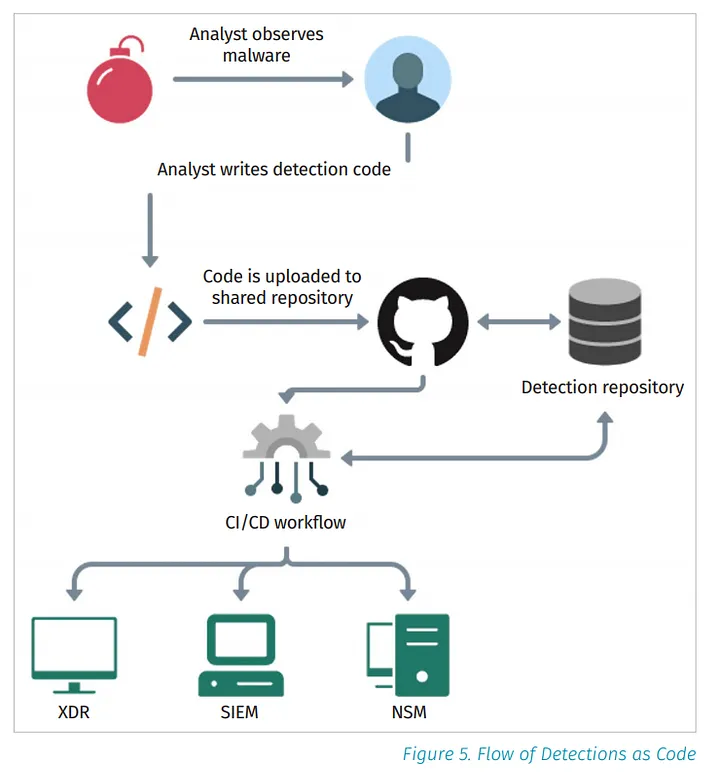

"Detection as Code" represents a paradigm shift in security operations. It's a methodology that treats security configurations and policies like software code. This means that instead of manually configuring rules and detection strategies, one can write code that defines these rules. This code can be version-controlled, tested, and deployed automatically, like any other software application. You can check out the following article for more detailed information about the concept of “Detection as Code” by Anton Chuvakin.

Detection as Code in Practice

Many Security Information and Event Management (SIEM) tools are available today, and they play a vital role in an organization's security operations. However, this variety makes it challenging to share detection content with partners who use a different SIEM. Manually translating queries from one query language to another is not sustainable, and defenders need a better way to share detections to keep up with ever-evolving threats from adversaries. This is where Sigma and pySigma come in.

Sigma and pySigma

Sigma was first introduced by Florian Roth and Thomas Patzke in 2017, and it has since become widely known among defenders for its advantages.

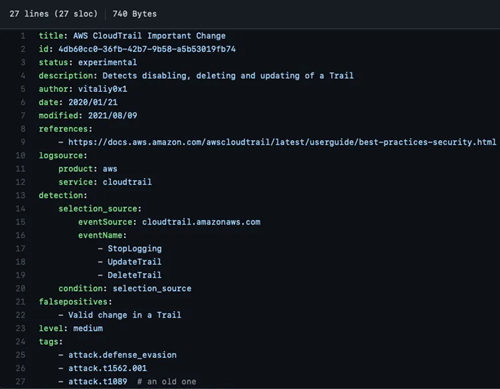

Sigma is a generic and open signature format that lets you describe relevant log events in a straightforward way. In practical terms, Sigma gives us a universal detection rule template that can be converted for use with many SIEM and log management platforms. Every rule adheres to a consistent structure, which streamlines the work of security analysts: they can use a converter to translate open-source detections into a format compatible with their specific security system. You can find more information about how to write Sigma rules on the official site: About Sigma | Sigma Website (sigmahq.io).

Sample of a typical detection rule

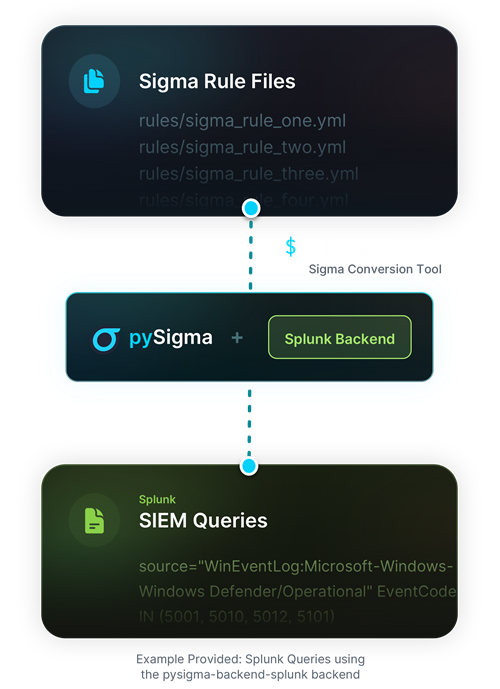
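For readers who cannot see the image, the overall shape of a rule can be sketched as a Python dictionary that mirrors the YAML fields. This is only an illustration: the rule content below is a hypothetical example, not the rule shown in the image, and the helper `missing_required_fields` is a made-up name that checks only the fields the Sigma specification marks as required (title, logsource, detection, and a condition inside detection).

```python
# Illustrative rule only: the values are hypothetical examples, not taken
# from the article's screenshot or from any published rule set.
SAMPLE_RULE = {
    "title": "Whoami Execution",
    "status": "experimental",
    "description": "Detects execution of whoami.exe",
    "logsource": {"category": "process_creation", "product": "windows"},
    "detection": {
        "selection": {"Image|endswith": "\\whoami.exe"},
        "condition": "selection",
    },
    "level": "low",
}

# Top-level fields that the Sigma specification requires in every rule.
REQUIRED_FIELDS = ("title", "logsource", "detection")


def missing_required_fields(rule: dict) -> list:
    """Return the required fields that are absent from the rule.

    Also reports 'detection.condition' when a detection block exists
    but has no condition.
    """
    missing = [name for name in REQUIRED_FIELDS if name not in rule]
    if "detection" in rule and "condition" not in rule["detection"]:
        missing.append("detection.condition")
    return missing


if __name__ == "__main__":
    print(missing_required_fields(SAMPLE_RULE))
```

In a real repository the rule lives in a YAML file and is validated by sigma-cli rather than by a hand-written check like this one.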

As mentioned earlier, manually converting Sigma rules into other query languages is a pain as it requires expertise in all other query languages. Fortunately, the newly developed pySigma framework saved the day for every security analyst after the deprecation of Sigmac. In short, the pySigma framework provides an API for each Sigma backend to perform conversion, transformation, and formatting of every Sigma rule.

Sigma in practice

To convert detection rules into a SIEM-compatible query using Sigma, you need to install sigma-cli and pySigma. pySigma sounds familiar, but what about sigma-cli?

Sigma CLI is a locally hosted Python program that will analyze, check, and convert your Sigma rules from YAML to your chosen format. This tool does the heavy lifting for you; however, its output is only as good as the rules you feed into it. Two related terms are worth a quick introduction:

Backend: A backend is the component that converts Sigma rules into a query compatible with a specific SIEM.

Pipeline: Pipelines (or "processing pipelines") provide a more nuanced way to configure and fine-tune how Sigma rules get converted into their SIEM-specific format. Pipelines are often used to ensure that the fields used within Sigma are mapped correctly to the fields used in each SIEM or to ensure that the correct logsource is being inserted/updated.

Once sigma-cli and pySigma are installed, the next step is to install the backends. As the project's primary goal is to manage detection rules for multiple SIEMs, we install the Splunk and Sentinel backends for this blog post.

The Splunk backend for Sigma is a plugin that allows Sigma rules, written in the Sigma language, to be translated into a format compatible with Splunk. Once the Splunk backend is installed, it provides functionality to convert and deploy Sigma rules in a way that Splunk can interpret and utilize for threat detection and log analysis. This integration helps streamline the process of creating and managing detection rules in Splunk using the Sigma format.

The Sentinel backend for Sigma is a plugin that supports integration with the Sentinel platform. Sentinel is a cloud-native SIEM service provided by Microsoft Azure. Like the Splunk backend, the Sentinel backend allows Sigma rules to be translated into a format compatible with Sentinel.
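As a minimal sketch of how a conversion step might be scripted, the snippet below builds a `sigma convert` command line and optionally runs it. It assumes sigma-cli is on the PATH and that the relevant backend plugin has already been installed (for Splunk, `sigma plugin install splunk`). The function names, the rule path, and the choice of the `splunk_windows` pipeline in the usage example are assumptions made for illustration; the target name for the Sentinel backend depends on which plugin you install, so it is left as a parameter.

```python
import subprocess


def build_convert_command(target, rule_path, pipeline=None):
    """Build the sigma-cli argument list for converting one rule file."""
    cmd = ["sigma", "convert", "--target", target]
    if pipeline:
        # A processing pipeline maps Sigma field names to the SIEM's fields.
        cmd += ["--pipeline", pipeline]
    cmd.append(rule_path)
    return cmd


def convert_rule(target, rule_path, pipeline=None):
    """Run sigma-cli and return the converted query text.

    Requires sigma-cli and the chosen backend to be installed;
    raises CalledProcessError if the conversion fails.
    """
    result = subprocess.run(
        build_convert_command(target, rule_path, pipeline),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    # Hypothetical rule path, shown only to illustrate the call.
    print(build_convert_command("splunk", "rules/master/active/example.yml", "splunk_windows"))
```

Keeping command construction separate from execution makes the step easy to unit test in a CI job without having a SIEM or even sigma-cli available.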

Deploying Sigma Rule Alerts: A Dual Integration with Splunk and Sentinel

Splunk

Once we have created detection rules and converted them using the Splunk backend, the next step is to generate alerts based on the converted detection rules and upload them to Splunk. Splunk provides REST API functions that enable us to either run searches or manage objects and configurations.

To call the Splunk REST API, you first need to install Splunk Enterprise and have it running in the background. After that, you need to authenticate using the user authentication API.

The session key returned from the authentication step is used to authorize the user for the alert upload API. For the full list of request parameters available when uploading alerts, we recommend checking the API documentation.

# Example request
curl -k -u admin:chang2me https://fool01:8092/services/saved/searches/ \
  -d name=test_durable \
  -d cron_schedule="*/3 * * * *" \
  -d description="This test job is a durable saved search" \
  -d dispatch.earliest_time="-24h@h" -d dispatch.latest_time=now \
  --data-urlencode 'search=search index="_internal" | stats count by host'
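The two-step flow described above (log in, then upload with the session key) can be sketched in Python using only the standard library. This is a sketch under assumptions, not the article's actual upload script: the function names are hypothetical, the requests are only constructed here rather than sent, and only a few of the saved-search parameters are shown. It relies on Splunk's `/services/auth/login` endpoint returning the key in a `sessionKey` XML element and on the `Authorization: Splunk <sessionKey>` header scheme.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET


def build_login_request(base_url, username, password):
    """POST request for Splunk's login endpoint, which returns a session key."""
    data = urllib.parse.urlencode({"username": username, "password": password}).encode()
    return urllib.request.Request(f"{base_url}/services/auth/login", data=data, method="POST")


def parse_session_key(response_xml):
    """Extract the session key from the XML body of the login response."""
    return ET.fromstring(response_xml).findtext("sessionKey")


def build_saved_search_request(base_url, session_key, name, search, cron_schedule):
    """POST request that creates a saved search, authorized by the session key."""
    fields = {"name": name, "search": search, "cron_schedule": cron_schedule}
    data = urllib.parse.urlencode(fields).encode()
    request = urllib.request.Request(
        f"{base_url}/services/saved/searches", data=data, method="POST"
    )
    request.add_header("Authorization", f"Splunk {session_key}")
    return request


if __name__ == "__main__":
    # Hypothetical host and key; pass the request to urllib.request.urlopen to send it.
    req = build_saved_search_request(
        "https://splunk.example:8089", "example-key", "test_durable",
        'search index="_internal" | stats count by host', "*/3 * * * *",
    )
    print(req.full_url, req.get_method())
```

Using `urlencode` for the form body avoids the shell-quoting pitfalls that come with embedding a search string containing quotes and pipes in a curl command.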

Sentinel

Initially, we planned to use the Microsoft Graph API to manage Sentinel alerts. However, we soon discovered that the Microsoft Graph API does not support this. We therefore switched to Microsoft's Azure management REST API (management.azure.com), which we use to upload alerts to our customers' Sentinel systems. This process requires authentication, which can be done from the command line:

az login --service-principal -u {app_id} -p {password} --tenant {tenant}

Once authenticated, the user can obtain an access token (for example with az account get-access-token), which is used to verify their permissions to access Azure resources and perform specific actions. With the necessary authorization, users can call the API to create and upload detection rules to Sentinel. For details about the request body and further instructions, please refer to the API documentation.

// Sample request to create an alert rule (here, a Microsoft security incident creation rule)

PUT https://management.azure.com/subscriptions/d0cfe6b2-9ac0-4464-9919-dccaee2e48c0/resourceGroups/myRg/providers/Microsoft.OperationalInsights/workspaces/myWorkspace/providers/Microsoft.SecurityInsights/alertRules/microsoftSecurityIncidentCreationRuleExample?api-version=2023-02-01

{
  "etag": "\"260097e0-0000-0d00-0000-5d6fa88f0000\"",
  "kind": "MicrosoftSecurityIncidentCreation",
  "properties": {
    "productFilter": "Microsoft Cloud App Security",
    "displayName": "testing displayname",
    "enabled": true
  }
}
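For rules converted from Sigma, the rule kind of interest is a scheduled query rule rather than the incident creation rule in the sample above. The following is a hedged sketch of how such a PUT request might be assembled in Python. The function name is hypothetical, the request is only built and not sent, and the property values (severity, the one-hour frequency and period, the threshold) are illustrative defaults. Check the property names against the Sentinel alert rules API documentation for the API version you use before relying on them.

```python
import json
import urllib.request

API_VERSION = "2023-02-01"  # same API version as the sample request above


def build_scheduled_rule_request(subscription_id, resource_group, workspace,
                                 rule_id, access_token, display_name, query):
    """Build a PUT request that creates or updates a scheduled alert rule."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.OperationalInsights/workspaces/{workspace}"
        f"/providers/Microsoft.SecurityInsights/alertRules/{rule_id}"
        f"?api-version={API_VERSION}"
    )
    body = {
        "kind": "Scheduled",
        "properties": {
            "displayName": display_name,
            "enabled": True,
            "severity": "Medium",           # illustrative default
            "query": query,                 # KQL produced by the Sigma backend
            "queryFrequency": "PT1H",       # ISO 8601 duration: run every hour
            "queryPeriod": "PT1H",          # look back over the last hour
            "triggerOperator": "GreaterThan",
            "triggerThreshold": 0,          # alert on any result
            "suppressionEnabled": False,
            "suppressionDuration": "PT1H",
        },
    }
    request = urllib.request.Request(url, data=json.dumps(body).encode(), method="PUT")
    request.add_header("Authorization", f"Bearer {access_token}")
    request.add_header("Content-Type", "application/json")
    return request


if __name__ == "__main__":
    # Hypothetical identifiers, used only to show the resulting URL.
    req = build_scheduled_rule_request(
        "sub-id", "myRg", "myWorkspace", "example-rule", "token",
        "Example rule", "SecurityEvent | take 1",
    )
    print(req.get_method(), req.full_url)
```

Because the rule identifier is part of the URL, sending the same request again updates the existing rule instead of creating a duplicate, which suits repeated pipeline runs.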

Integrating CI/CD into the infrastructure: Automating rules testing and uploading

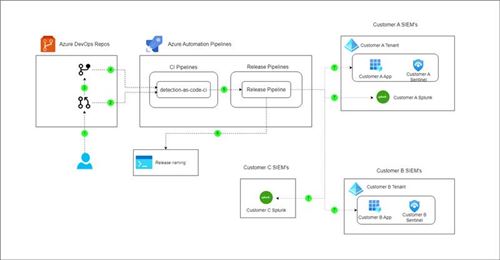

We have already tackled some issues, such as translating Sigma rules into SIEM-compatible queries, setting up alerts based on the converted detection rules, and fixing minor errors in rule validation. However, this is just the beginning, and there is more work to do. To make the project practical, we need to incorporate a CI/CD pipeline. Doing so automates the integration, testing, and deployment processes, so that updates and new rules are validated before they are deployed. The result is a more efficient and reliable solution. For our project, we use Azure DevOps for version control and CI/CD pipelines. Here is a brief overview of our architecture:

The architecture can be broken down into the following steps:

- After familiarizing yourself with Sigma rule syntax, write Sigma detection rules in the rules folder. Please check the official Sigma rule specification to write good Sigma rules and adhere to best practices and conventions. Most of the time, you will write your rule in the master folder, so that it is shared across environments. If you want to write rules for a specific customer only, write them in that customer’s subfolder. If you are not yet confident in a rule or it is still unstable, leave it in the inactive subfolder; otherwise, put it in the active subfolder so that it is uploaded automatically.

// folder architecture
rules
  -> customer_a
    -> active
    -> inactive
  -> customer_b
    ...
  -> master
    ...

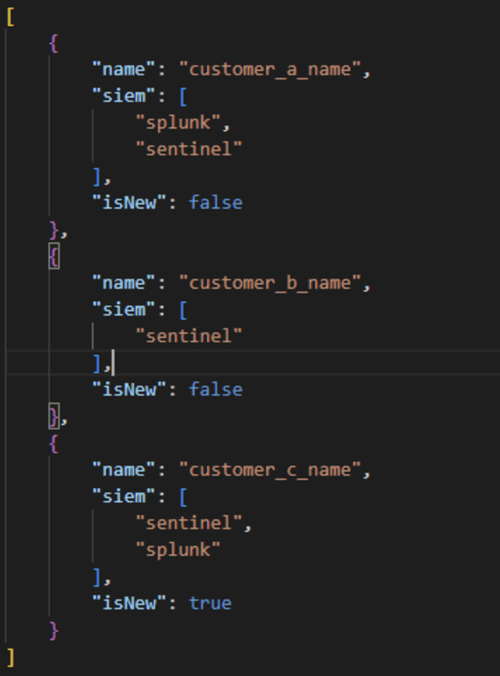

- Remember to create the config file customer.json. This is where we save each customer's name and SIEMs so that the master rules can be uploaded to the right environments. The “isNew” property indicates whether the customer needs the rules from the master folder: when “isNew” is true, rules from the master folder are uploaded; when it is false, they are not. One limitation is that the property does not change to false automatically once the master rules are uploaded, so you need to change it manually afterwards.

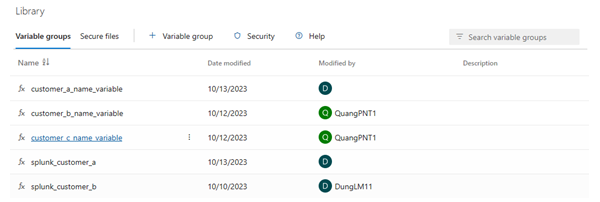

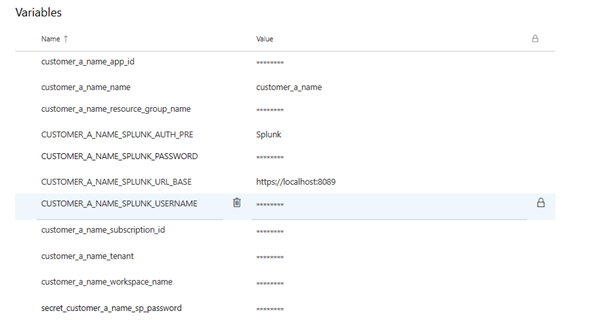

- Next, in Azure DevOps Pipelines, create a variable group for each customer to store their environment variables. We will use these credentials to deploy rules later.

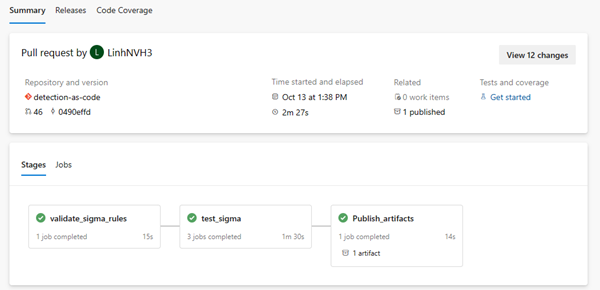

- Now push your rule changes to a branch and open a pull request. Your code will go through the CI pipeline, which validates your rules. If a rule fails validation, you will need to rewrite it according to the Sigma specification.

- If validation passes, your rule changes can be merged into the main branch. After the merge, they are built and published as artifacts, tagged with the SIEMs involved in the new changes.

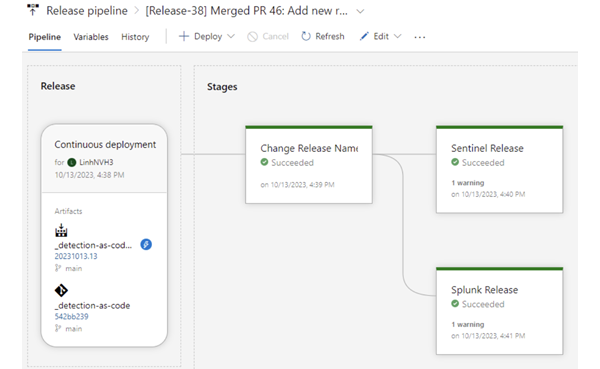

- After artifacts are published and tagged accordingly, the release pipeline will be triggered. The first stage will rename the Release to the desired format for easier tracking. The next stages are deployment stages and will run based on artifact tags. They will upload and edit rules in the customer’s environment, or disable inactive rules.

- Now check whether your rules were successfully uploaded to the SIEMs. If a rule needs to change, simply edit it and repeat the steps above. Rules that produce too many false positives, or that are simply wrong or unstable, should be moved from the active to the inactive folder; then follow the steps above so that the rule is disabled and can be investigated further before it is uploaded again.
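The rule selection logic described in the steps above (customer folder, master folder, and the “isNew” flag) can be sketched as follows. The actual customer.json schema is only shown in a screenshot, so the keys used here (`name`, `siems`, `isNew`) are assumptions, as is the idea that the master folder has its own active subfolder. The function names are hypothetical and do not come from the project's pipeline scripts.

```python
import json
from pathlib import Path


def load_customers(config_path):
    """Load customer entries from customer.json (assumed to be a JSON list)."""
    return json.loads(Path(config_path).read_text(encoding="utf-8"))


def rule_folders_for(customer, rules_root):
    """Return the folders whose rules should be uploaded for one customer."""
    root = Path(rules_root)
    folders = [root / customer["name"] / "active"]
    # New customers also receive the shared rules from the master folder.
    if customer.get("isNew", False):
        folders.append(root / "master" / "active")
    return folders


def collect_rules(folders):
    """Collect the .yml rule files in the given folders, skipping missing ones."""
    rules = []
    for folder in folders:
        if folder.is_dir():
            rules.extend(sorted(folder.glob("*.yml")))
    return rules


if __name__ == "__main__":
    # Hypothetical customer entry, mirroring the assumed customer.json schema.
    customer = {"name": "customer_a", "siems": ["splunk"], "isNew": True}
    for path in collect_rules(rule_folders_for(customer, "rules")):
        print(path)
```

Rules in an inactive folder are never collected, which matches the intent that unstable rules stay out of automatic uploads.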

Limitations

- pySigma Limitation: Since the current version of AzureBackend is not capable of validating rule convention, we need to consider creating a new backend to convert rules or modifying the current one.

- CI/CD Security: The entire process depends heavily on the CI/CD pipeline for validation, merging, building, and publishing the rules. Any CI/CD pipeline issue or compromise could disrupt the entire process. We have already applied some best practices to protect our CI/CD, such as pipeline variables and secrets. However, there is still work to do, including securing deployment credentials with Azure Key Vault and securing Azure resources following RBAC best practices.

- SIEM Connectivity: If the SIEM is not reachable from Azure DevOps, we cannot connect to and integrate with it. In this situation, we should consider using Azure DevOps agent pools.

Agent Pool: An agent pool is a collection of agents. Instead of managing each agent individually, you organize agents into agent pools. When you configure an agent, it is registered with a single pool, and when you create a pipeline, you specify the pool in which the pipeline runs. When you run the pipeline, it runs on an agent from that pool that meets the demands of the pipeline.

Conclusion

Despite the limitations in pySigma's validation and the need for stronger CI/CD security, we successfully established a foundation for improving SIEM management practices. By adopting "Detection as Code" and leveraging CI/CD pipelines, organizations can enhance threat detection, reduce manual effort, and improve security. FPT Software believes that implementing detection-as-code strategies and integrating CI/CD pipelines are crucial for modernizing security operations and effectively mitigating cyber threats.